Posted 01 Apr 2007

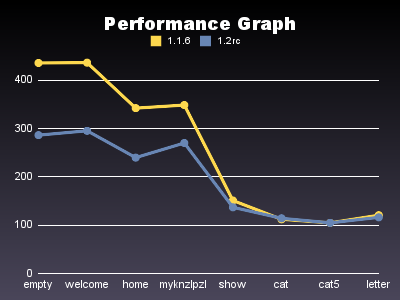

Rails 1.2-stable is somewhat slower than Rails 1.1-stable, especially on action-cached pages. But the slowdown I measured is nowhere near the numbers which have been reported by others. In fact, two recent patches to speed up session creation and pstore session retrieval have resulted in improved performance for one of the benchmarked actions.

The following performance data table shows the speed difference for the fastest available configuration for the tested application.

| page | c1 total (s) | c2 total (s) | c1 r/s | c2 r/s | c1 ms/r | c2 ms/r | c1/c2 |
|---|---|---|---|---|---|---|---|
| empty | 11.44034 | 10.91962 | 437.0 | 457.9 | 2.29 | 2.18 | 1.05 |
| welcome | 13.04648 | 14.29709 | 383.2 | 349.7 | 2.61 | 2.86 | 0.91 |
| recipes | 10.83617 | 13.51635 | 461.4 | 369.9 | 2.17 | 2.70 | 0.80 |
| my_recipes | 10.80137 | 13.48035 | 462.9 | 370.9 | 2.16 | 2.70 | 0.80 |
| show | 24.16722 | 27.37878 | 206.9 | 182.6 | 4.83 | 5.48 | 0.88 |
| cat | 26.56038 | 29.88683 | 188.3 | 167.3 | 5.31 | 5.98 | 0.89 |
| cat_page5 | 27.24954 | 30.78081 | 183.5 | 162.4 | 5.45 | 6.16 | 0.89 |
| letter | 26.99067 | 30.17507 | 185.2 | 165.7 | 5.40 | 6.04 | 0.89 |
| all requests | 151.09216 | 170.43488 | 264.7 | 234.7 | 3.78 | 4.26 | 0.89 |

| | | | | | | | |
|---|---|---|---|---|---|---|---|
| | 10.42790 | 12.90426 | 120.0 | 135.0 | 6.90 | 7.57 | 0.81 |

c1: 1.1-stable, c2: 1.2-stable, r/s: requests per second, ms/r: milliseconds per request
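The derived columns can be recomputed from the raw numbers. The following Ruby sketch assumes that the first two values in each row are total run times in seconds and that c1/c2 is the ratio of those times, which matches the figures shown. The method names are made up for illustration and are not part of railsbench.

```ruby
# Illustrative helpers, not part of railsbench.

# Ratio of total run times; values below 1.0 mean c2 (1.2-stable) is slower.
def time_ratio(c1_seconds, c2_seconds)
  (c1_seconds / c2_seconds).round(2)
end

# Convert requests per second into milliseconds per request.
def ms_per_request(requests_per_second)
  (1000.0 / requests_per_second).round(2)
end

puts time_ratio(11.44034, 10.91962)   # empty:       1.05
puts time_ratio(10.83617, 13.51635)   # recipes:     0.8
puts ms_per_request(437.0)            # empty, c1:   2.29
puts ms_per_request(369.9)            # recipes, c2: 2.7
```

A ratio of 0.80 for recipes therefore means that 1.1-stable needed only about 80% of the time 1.2-stable took for the same requests.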

Read the full report here.


Posted 14 Jan 2007

Today I’ve released a new gem version (0.9.1) of railsbench. It’s mainly a bug fix release for the installation problems discovered in 0.9.0.

However, there’s also a new script called generate_benchmarks, which can be used to generate and maintain the benchmark configuration file used by railsbench.

railsbench generate_benchmarks updates the benchmark configuration file with

- a benchmark for each controller/action pair (named <controller>_<action>)

- a benchmark for each controller combining all actions of the controller into one benchmark (named <controller>_controller)

- a benchmark comprising all controllers (named all_controllers)

This should get you started benchmarking in no time ;-)

After generating the file, you will need to edit the benchmarks and replace routing placeholders (:id, :article_id, etc.) with actual values.
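To make the naming scheme and the placeholder step concrete, here is a minimal Ruby sketch. It is not the code used by generate_benchmarks: the controller list, the method names and the example URIs are all hypothetical, and the real configuration file format is not shown here.

```ruby
# Hypothetical input: controller name => list of action names.
ACTIONS = {
  "recipes" => %w[index show],
  "users"   => %w[login]
}

# Build benchmark names following the scheme described above:
# <controller>_<action>, <controller>_controller, all_controllers.
def benchmark_names(actions_by_controller)
  names = []
  actions_by_controller.each do |controller, actions|
    actions.each { |action| names << "#{controller}_#{action}" }
    names << "#{controller}_controller"
  end
  names << "all_controllers"
end

# Replace routing placeholders such as :id with actual values.
# Raises KeyError if a placeholder has no value.
def fill_placeholders(uri, values)
  uri.gsub(/:(\w+)/) { values.fetch($1.to_sym).to_s }
end

puts benchmark_names(ACTIONS).inspect
puts fill_placeholders("/recipes/show/:id", { :id => 42 })
```

Using fetch rather than a plain hash lookup makes a forgotten placeholder fail loudly instead of silently producing a URI with an empty segment.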

If you add new controllers/actions later in the development process, you can invoke railsbench generate_benchmarks again to add new items to the configuration file. The older entries will not be removed by this process.

Currently, generate_benchmarks only works for the 1.2 release candidate and edge Rails (but I’ll gladly accept patches to make it work with 1.1.6).

Happy benchmarking!


Posted 26 Dec 2006

The latest version of railsbench contains scripts to convert raw performance data files into pretty pictures. They require rmagick and gruff to be installed on your system.

railsbench perf_plot <files>

will generate a chart comparing benchmark data from all files passed as arguments.

Example:

Enjoy!


Posted 26 Dec 2006

railsbench is now available as a gem from RubyForge. I recommend that all users switch to the gem version. This move should make it much easier to maintain railsbench installations on a larger number of machines.

Installation is now as simple as sudo gem install railsbench. Or, if you have installed the 0.8.4 gem version: sudo gem update railsbench.

The gem release will install only one Ruby driver script, named railsbench, in the default Ruby path; individual commands like perf_run etc. are accessible through this driver. So instead of typing

perf_run 100 "-bm=all -mysql_session"

you would use

railsbench perf_run 100 "-bm=all -mysql_session"

The perf_ command prefix is now optional, thus

railsbench run 100 "-bm=all -mysql_session"

would give identical results.
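One way a driver could treat the prefix as optional is sketched below in Ruby. This is an assumption about the approach, not the actual railsbench driver code: the script list is a small sample of names mentioned in these posts, and resolve_command is a made-up helper.

```ruby
# Sample of script names mentioned in these posts (not the full list).
SCRIPTS = %w[perf_run perf_plot generate_benchmarks]

# Return the script name for a command, trying the exact name first
# and the perf_-prefixed name second; nil if neither exists.
def resolve_command(name, scripts = SCRIPTS)
  return name if scripts.include?(name)
  prefixed = "perf_#{name}"
  scripts.include?(prefixed) ? prefixed : nil
end

puts resolve_command("perf_run")             # perf_run
puts resolve_command("run")                  # perf_run
puts resolve_command("generate_benchmarks")  # generate_benchmarks
```

Checking the exact name first keeps commands without the prefix, such as generate_benchmarks, from being rewritten.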

If you’re accustomed to the old syntax and find typing railsbench awkward, you could add the gem’s script directory to your search path by adding the following code to your shell profile:

eval `railsbench path`; export PATH

This will continue to work should you upgrade railsbench to a newer version.

Update: In order to call the scripts directly, you need to issue a sudo railsbench postinstall command after installing the gem. I haven’t found a way to create a gem that sets the executable bit on anything which lives outside the bin directory and isn’t a binary executable.


Posted 10 Nov 2006

The alert reader of my blog might have noticed that I really care about performance. Some people have even accused me of being “obsessed” with it. Very often these statements are accompanied by Tony Hoare’s famous line, “Premature optimization is the root of all evil”, which was later repeated by Donald Knuth.

I’ve always admired the work of Hoare and Knuth and I’m well aware of the famous quote on premature optimization. And they’re absolutely right: premature optimization is evil.

However, what lots of people seem to miss is that the most important word in that statement is “premature”.

If you don’t give any consideration to performance, you will be sure to avoid premature optimization. But you might have made a design decision which simply cannot be reverted without huge cost at a later stage of the project.

Let me give you an example: I was once asked to evaluate a project because it had performance problems. The software was intended to be used in a call center, which requires really fast response times. It turned out that the external company hired to implement this software had made the decision to write the UI dialogs in a custom templating language, for which they had written an interpreter. The interpreter had been written in Visual Basic, which, at that time, was interpreted as well.

So in effect, the dialogs were running c1*c2 times slower than necessary, where c1 and c2 are the overheads of the two interpreters. It’s probably safe to assume the software ran about 25-50 times slower than necessary. And even top-of-the-line hardware with 2 processors wasn’t able to make the package run fast enough.
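To put numbers on the multiplication: the per-interpreter overheads in this small Ruby sketch are illustrative guesses chosen to land in the 25-50 range, not measurements from the project.

```ruby
# Overheads of stacked interpreters multiply rather than add.
def combined_slowdown(*overheads)
  overheads.inject(1) { |product, overhead| product * overhead }
end

puts combined_slowdown(5, 5)    # both layers 5x slower:   25
puts combined_slowdown(5, 10)   # one 5x, the other 10x:   50
```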

In the end, the whole project was canceled after millions of EUR had been poured into it, and the problem was solved by buying another call center wholesale. I still believe today that if some consideration had been given to performance questions during the architectural design phase, this disaster could have been avoided.

So, yes, premature optimization is evil, but no consideration for performance issues is just as bad.

Update: BTW, I was prompted to write this little piece after reading a blog entry about a talk delivered at the Web 2.0 conference. It clearly shows that speed matters down to sub-second resolution, even for web applications. I wouldn’t be surprised if a well-known UI axiom gets proven to hold in the web context as well: response time should be below 100ms for graphical user interfaces. Making it faster is almost unnoticeable to the end user, but slower than 100ms does have negative effects.
